Google’s New AI Could Detect Illness from Cough Sounds

Google has unveiled an artificial intelligence (AI) system that analyzes human sounds, such as coughs, for potential signs of disease. Called Health Acoustic Representations (HeAR), the system reflects the company’s work on audio-based disease detection using machine learning, and it could change diagnostic practices.

The Power of Sound Analysis and Audio Data

While traditional medical diagnostics rely heavily on visual data such as X-rays and MRI scans, Google’s HeAR system explores the largely untapped potential of audio data. Trained on 300 million audio clips of human sounds sourced from YouTube, the AI model has learned to pick up nuances in coughs, breathing patterns, and other audio cues that could indicate underlying health conditions.

Non-Invasive and Accessible Diagnostics

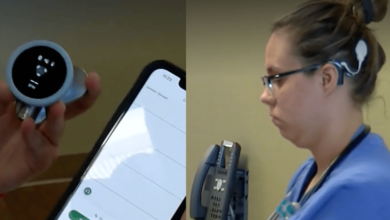

One of the most significant advantages of HeAR is its potential to make diagnostics non-invasive and accessible. Traditional diagnostic procedures can be invasive, time-consuming, and costly, whereas audio-based analysis offers a simple and convenient alternative. Patients could record their coughs or other sounds using a smartphone or a dedicated device, enabling doctors to analyze the audio data remotely and identify potential health issues.

Transforming Diagnostic Practices: Early Detection and Proactive Care

By analyzing cough patterns and other audio cues, the AI system may be able to identify early signs of respiratory conditions, such as pneumonia, bronchitis, or even lung cancer, before more severe symptoms manifest. This early detection capability could enable proactive intervention and improved patient outcomes.

Overcoming Language and Geographical Barriers

Another significant advantage of audio-based disease diagnosis is its potential to overcome language and geographical barriers. Traditional diagnostic methods often depend on patients describing their symptoms verbally, whereas HeAR’s analysis is based on the sounds themselves, such as coughs and breathing, rather than on spoken language. That makes it accessible to patients from diverse linguistic and cultural backgrounds, and it could be particularly beneficial in remote or underserved areas where access to healthcare resources is limited.

Refining Accuracy and Addressing Privacy Concerns

While HeAR shows considerable promise, there are still challenges to overcome. Ensuring the accuracy and reliability of the system’s predictions is paramount, as a misdiagnosis could have severe consequences. Addressing privacy concerns about the collection and use of audio data will also be crucial for widespread adoption.

As the technology continues to evolve, it could pave the way for a new era of audio-based disease diagnosis, transforming the way healthcare professionals approach diagnostic practices.